
Operationalising Moral Foundations Theory


‘Moral-foundations researchers have investigated the similarities and differences in morality among individuals across cultures (Haidt & Josephs, 2004). These researchers have found evidence for five fundamental domains of human morality’

Feinberg & Willer, 2013, p. 1

There may be cultural variations in what is, and what isn’t, an ethical issue.

So we can’t assume in advance that we know for sure what is ethical and what isn’t.

But if we don’t know what is ethical and what isn’t, how can we study cultural variations in it?

Moral Foundations Questionnaire (MFQ)

When you decide whether something is right or wrong, to what extent are the following considerations relevant to your thinking?

... whether or not someone was harmed?

... whether or not someone suffered emotionally?

... whether or not someone did something disgusting?

... whether or not someone did something unnatural or degrading?

Graham et al., 2009
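Answers like these are usually turned into one score per foundation by averaging a respondent's ratings of the items that belong to it. The sketch below assumes ratings on a 0 to 5 scale (0 = not at all relevant, 5 = extremely relevant) and a hypothetical mapping of the four example items to the care/harm and purity foundations; `ITEM_FOUNDATION` and `foundation_scores` are illustrative names, not part of any published scoring tool.

```python
from statistics import mean

# Hypothetical mapping from the example items above to their foundation.
ITEM_FOUNDATION = {
    "whether or not someone was harmed": "care/harm",
    "whether or not someone suffered emotionally": "care/harm",
    "whether or not someone did something disgusting": "purity",
    "whether or not someone did something unnatural or degrading": "purity",
}

def foundation_scores(responses: dict[str, int]) -> dict[str, float]:
    """Average one respondent's relevance ratings (assumed 0-5) per foundation."""
    by_foundation: dict[str, list[int]] = {}
    for item, rating in responses.items():
        if not 0 <= rating <= 5:
            raise ValueError(f"rating out of range for {item!r}: {rating}")
        by_foundation.setdefault(ITEM_FOUNDATION[item], []).append(rating)
    # One mean per foundation that had at least one answered item.
    return {f: mean(ratings) for f, ratings in by_foundation.items()}

answers = {
    "whether or not someone was harmed": 5,
    "whether or not someone suffered emotionally": 4,
    "whether or not someone did something disgusting": 2,
    "whether or not someone did something unnatural or degrading": 1,
}
print(foundation_scores(answers))  # {'care/harm': 4.5, 'purity': 1.5}
```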

Basic requirements

- internal validity (roughly: do answers to each category of questions appear to reflect a single, distinct underlying tendency?)

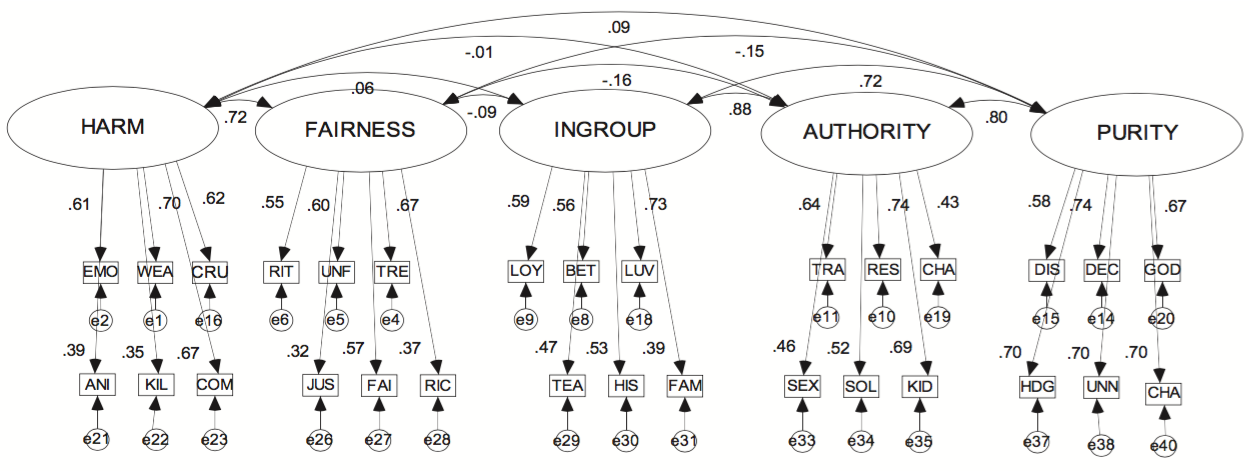

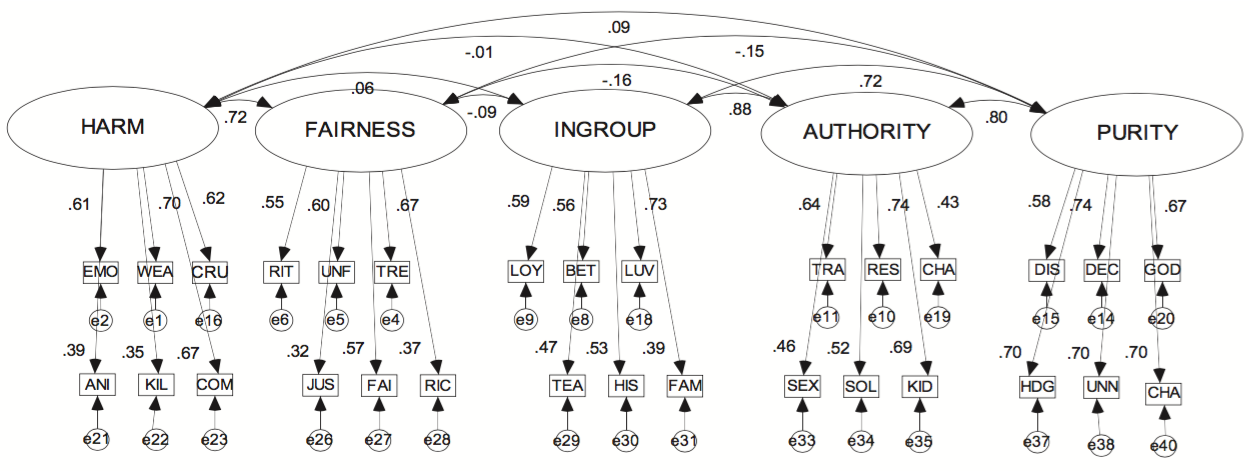

Graham et al., 2011, figure 3 (part)
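The deck assesses internal validity with confirmatory factor analysis. A cruder check often reported alongside it, swapped in here purely for illustration, is Cronbach's alpha, which measures how consistently a set of items vary together: α = k/(k - 1) · (1 - Σ var(item) / var(total)). A minimal sketch with invented ratings; note that a high alpha does not by itself show that the items reflect a single underlying tendency, which is one reason CFA is preferred.

```python
from statistics import variance

def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha for k items, each a list of scores from the same respondents."""
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    n = len(items[0])
    if any(len(item) != n for item in items):
        raise ValueError("every item needs one score per respondent")
    # Each respondent's total across all items.
    totals = [sum(item[i] for item in items) for i in range(n)]
    total_var = variance(totals)
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    item_var_sum = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_var_sum / total_var)

# Hypothetical ratings of two care/harm items by four respondents.
print(cronbach_alpha([[1, 2, 3, 4], [2, 1, 4, 3]]))  # approximately 0.75
```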

Confirmatory factor analysis

observed variables: answers to individual MFQ questions

latent factors: the five moral primitives

Graham et al., 2011, figure 3 (part)
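In CFA, a hypothesised model implies a covariance matrix for the observed answers, Σ = ΛΦΛᵀ + Θ, where Λ holds the loadings of questions on latent factors, Φ the factor covariances and Θ the (diagonal) unique variances; fit indices summarise how far the observed covariances depart from Σ. The sketch below uses invented parameter values for two factors and four questions, computes Σ, and then computes one common formulation of the standardized root mean square residual (SRMR). It does not estimate the parameters, which real analyses do by maximum likelihood in dedicated SEM software.

```python
from math import sqrt

Matrix = list[list[float]]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    # Plain matrix product, kept dependency-free.
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(a: Matrix) -> Matrix:
    return [list(row) for row in zip(*a)]

def implied_cov(loadings: Matrix, factor_cov: Matrix, uniquenesses: list[float]) -> Matrix:
    """Model-implied covariance: Sigma = Lambda Phi Lambda^T + Theta (Theta diagonal)."""
    sigma = matmul(matmul(loadings, factor_cov), transpose(loadings))
    for i, theta in enumerate(uniquenesses):
        sigma[i][i] += theta
    return sigma

def srmr(observed: Matrix, implied: Matrix) -> float:
    """Root mean square of correlation residuals over the lower triangle, diagonal included."""
    p = len(observed)
    total, count = 0.0, 0
    for i in range(p):
        for j in range(i + 1):
            r_obs = observed[i][j] / sqrt(observed[i][i] * observed[j][j])
            r_imp = implied[i][j] / sqrt(implied[i][i] * implied[j][j])
            total += (r_obs - r_imp) ** 2
            count += 1
    return sqrt(total / count)

# Hypothetical two-factor model: questions 1-2 load on care/harm, 3-4 on purity.
LAMBDA = [[0.8, 0.0], [0.8, 0.0], [0.0, 0.8], [0.0, 0.8]]
PHI = [[1.0, 0.3], [0.3, 1.0]]    # factor variances fixed at 1, correlation 0.3
THETA = [0.36, 0.36, 0.36, 0.36]  # unique variances, so each question has variance 1

sigma = implied_cov(LAMBDA, PHI, THETA)

# Invented "observed" matrix: identical except questions 2 and 3 correlate 0.5.
observed = [row[:] for row in sigma]
observed[1][2] = observed[2][1] = 0.5
print(round(srmr(observed, sigma), 3))  # 0.097
```

Only one residual is non-zero here, so the misfit is concentrated in a single pair of questions; a real CFA would flag this through modification indices or residual inspection before any model revision.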

‘The five-factor model fit the data better (weighing both fit and parsimony) than competing models, and this five-factor representation provided a good fit for participants in 11 different world areas.’

Graham et al., 2011, p. 380

‘[...] empirical support for the MFQ for the first time in a predominantly Muslim country. [...] the 5-factor model, although somewhat below the standard criteria of fitness, provided the best fit among the alternatives.’

Yilmaz et al., 2016, p. 153

Basic requirements

- internal validity (roughly: do answers to each category of questions appear to reflect a single, distinct underlying tendency?)

- test–retest reliability (are you as an individual likely to give the same answers at widely spaced intervals? Yes, at least over a 37-day interval: Graham et al., 2011, p. 371)

- external validity (relation to other scales)

‘each foundation was the strongest predictor for its own conceptually related group of external scales’ (Graham et al., 2011, p. 373)
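Both checks in the list above come down to correlations. A minimal sketch with invented scores: test–retest reliability as the Pearson correlation between the same respondents' foundation scores on two occasions, and a simplified version of the external-validity pattern that asks, for each hypothetical group of external scales, whether the conceptually related foundation has the largest absolute correlation with it. This is a simplification for illustration, not the published procedure; `pearson`, `own_foundation_strongest` and all numbers are hypothetical.

```python
from math import sqrt
from statistics import mean

def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation between two equally long lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equally long lists with at least two scores")
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

# Test-retest: hypothetical purity scores for five people, roughly 37 days apart.
time1 = [3.5, 2.0, 4.5, 1.0, 3.0]
time2 = [3.0, 2.5, 4.5, 1.5, 3.0]
print(round(pearson(time1, time2), 2))  # 0.96

def own_foundation_strongest(corr: dict[str, dict[str, float]],
                             expected: dict[str, str]) -> bool:
    """True if, for every scale group, its conceptually related foundation
    has the largest absolute correlation with it."""
    return all(
        max(row, key=lambda f: abs(row[f])) == expected[scale]
        for scale, row in corr.items()
    )

# Hypothetical correlations between foundation scores and two groups of external scales.
corr = {
    "empathy scales": {"care": 0.45, "fairness": 0.20, "purity": 0.05},
    "disgust scales": {"care": 0.10, "fairness": 0.02, "purity": 0.40},
}
expected = {"empathy scales": "care", "disgust scales": "purity"}
print(own_foundation_strongest(corr, expected))  # True
```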

There may be cultural variations in what is, and what isn’t, an ethical issue.

So we can’t assume in advance that we know for sure what is ethical and what isn’t.

But if we don’t know what is ethical and what isn’t, how can we study cultural variations in it?

Does MFT answer this question?

fieldwork -> hypothetical model -> CFA -> revise model -> ...

Basic requirements

- internal validity (roughly: do answers to each category of questions appear to reflect a single, distinct underlying tendency?)

- test–retest reliability (are you as an individual likely to give the same answers at widely spaced intervals? Yes, at least over a 37-day interval: Graham et al., 2011, p. 371)

- external validity (relation to other scales)

‘each foundation was the strongest predictor for its own conceptually related group of external scales’ (Graham et al., 2011, p. 373)

- measurement invariance (for cross-cultural comparison)

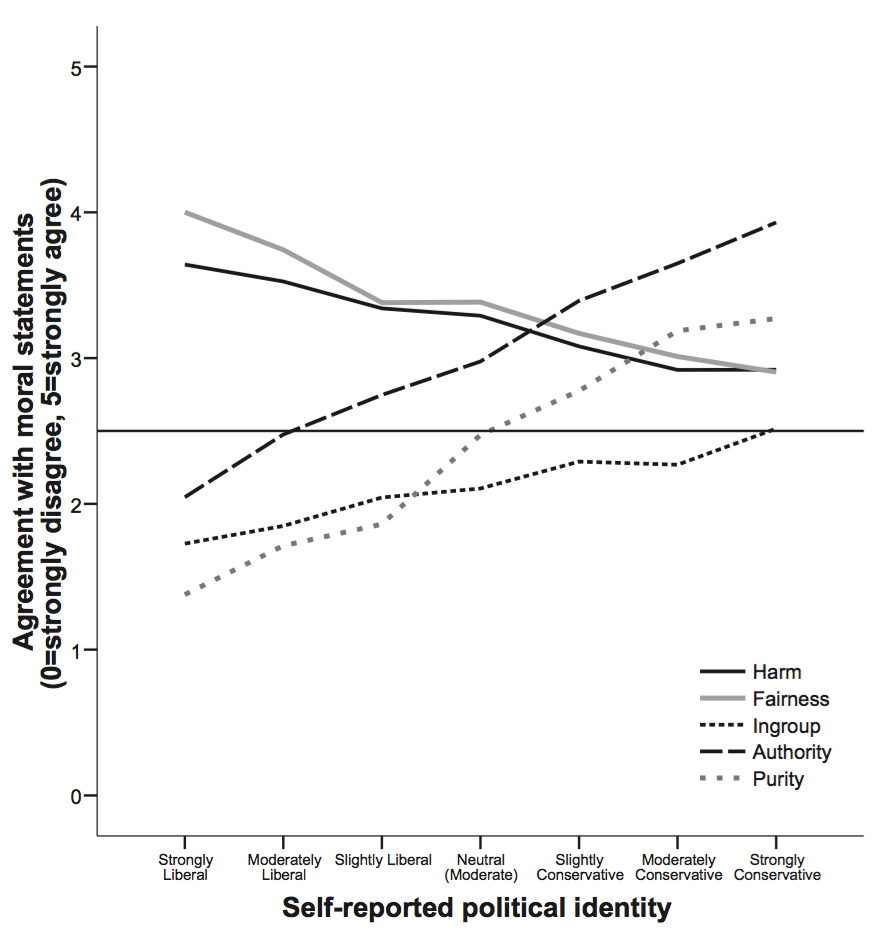

Graham et al., 2009, figure 3

Does this reflect merely differences in how people interpret the questions, or substantial differences in their moral foundations?

‘A finding of measurement invariance would provide more confidence that use of the MFQ across cultures can shed light on meaningful differences between cultures rather than merely reflecting the measurement properties of the MFQ’

Iurino & Saucier, 2018, p. 2

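metric invariance (equal factor loadings across groups) - you can compare variances and covariances, and hence relationships between constructs

scalar invariance (equal loadings and item intercepts across groups) - you can also compare means (e.g. ‘conservatives put more weight on purity than liberals’)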
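Why the distinction matters can be seen from the usual linear measurement model for one question in group g, x = τ_g + λ_g·η + ε, where τ is the question's intercept, λ its loading and η the latent foundation score. The expected answer is τ_g + λ_g·κ_g, with κ_g the group's latent mean, so if intercepts differ (scalar non-invariance), two groups with the same latent mean can still differ in their average answers; if loadings differ (metric non-invariance), the same latent variance produces different observed covariances. A minimal sketch with invented parameters; actual invariance testing compares the fit of multi-group CFA models with loadings, then intercepts, constrained equal across groups, which this does not do.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Item:
    """Parameters of one question in one group (hypothetical values below)."""
    intercept: float  # tau: expected answer when the latent score is 0
    loading: float    # lambda: change in expected answer per unit of latent score

def expected_answer(item: Item, latent_mean: float) -> float:
    # E[x] = tau + lambda * kappa, since the error term has mean zero.
    return item.intercept + item.loading * latent_mean

def item_covariance(a: Item, b: Item, latent_var: float) -> float:
    # Cov(x_a, x_b) = lambda_a * lambda_b * var(eta) for two questions on one
    # factor with uncorrelated errors.
    return a.loading * b.loading * latent_var

LATENT_MEAN, LATENT_VAR = 2.0, 1.0  # identical in every group by construction

# Scalar non-invariance: same loading, different intercept.
group_a = Item(intercept=1.0, loading=0.8)
group_b = Item(intercept=1.5, loading=0.8)
print(expected_answer(group_a, LATENT_MEAN), expected_answer(group_b, LATENT_MEAN))
# about 2.6 vs 3.1: a mean difference with no difference in the foundation itself

# Metric non-invariance: same latent variance, weaker loadings in group c.
# Each call treats two questions that share the same parameters.
group_c = Item(intercept=1.0, loading=0.5)
print(item_covariance(group_a, group_a, LATENT_VAR),
      item_covariance(group_c, group_c, LATENT_VAR))
# about 0.64 vs 0.25: weaker observed links between questions and foundation
```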

Does Moral Foundations Theory provide a model that is invariant?

Davis et al. (2016): metric but not scalar invariance for Black people vs White people

Atari, Graham, & Dehghani (2020): scalar non-invariance for US vs Iranian participants

Doğruyol, Alper, & Yilmaz (2019): metric non-invariance for WEIRD (Western, educated, industrialized, rich, democratic) vs non-WEIRD samples

‘although the same statements tap into the same moral foundations in each case, the strength of the link between the statements and the foundations were different in WEIRD and non-WEIRD cultures’ (Doğruyol et al., 2019).

‘there were problems with scalar invariance, which suggests that researchers may need to carefully consider whether this scale is working similarly across groups before conducting mean comparisons’ (Davis et al., 2016, p. e27).

